Algorithm | Degeneracy at NL_CL #2: Flesh

By Naoto Hieda

NL_CL #2: Flesh was held on May 24th, 2020, hosted by Instrument Inventors Initiative (iii). Although it was originally planned for iii’s workspace in The Hague, we adopted an online streaming format in response to the current situation. NL_CL is a series by Netherlands Coding Live focusing on live-coding performances. Each edition curates performances around a specific theme; the theme of the first edition was “The Future of Music” and this time it was “Flesh”. The three performances were curated by iii’s resident artist Joana Chicau, and the event was facilitated by Marije Baalman. The performances were “Algorithm | Degeneracy” by Naoto Hieda, “Anatomies of Intelligence” by Joana Chicau & Jonathan Reus, and “Radio-active Monstrosities” by Angeliki Diakrousi. You can find my performance in the video below:

In this article, I would like to explain the background of my performance.

I previously wrote about this performance in this article, when I did the first showing in a physical space. The concept of this work is to show how I, as a person with Asperger’s, see multimodal information in a continuous space. I first found this concept during the machine learning literacy workshop at the School for Poetic Computation in 2018. In 2019, I started practicing to embody this concept in order to eventually create an artwork. At the Choreographic Coding Lab in Mainz in May 2019, I created a prototype to bridge movement and live coding:

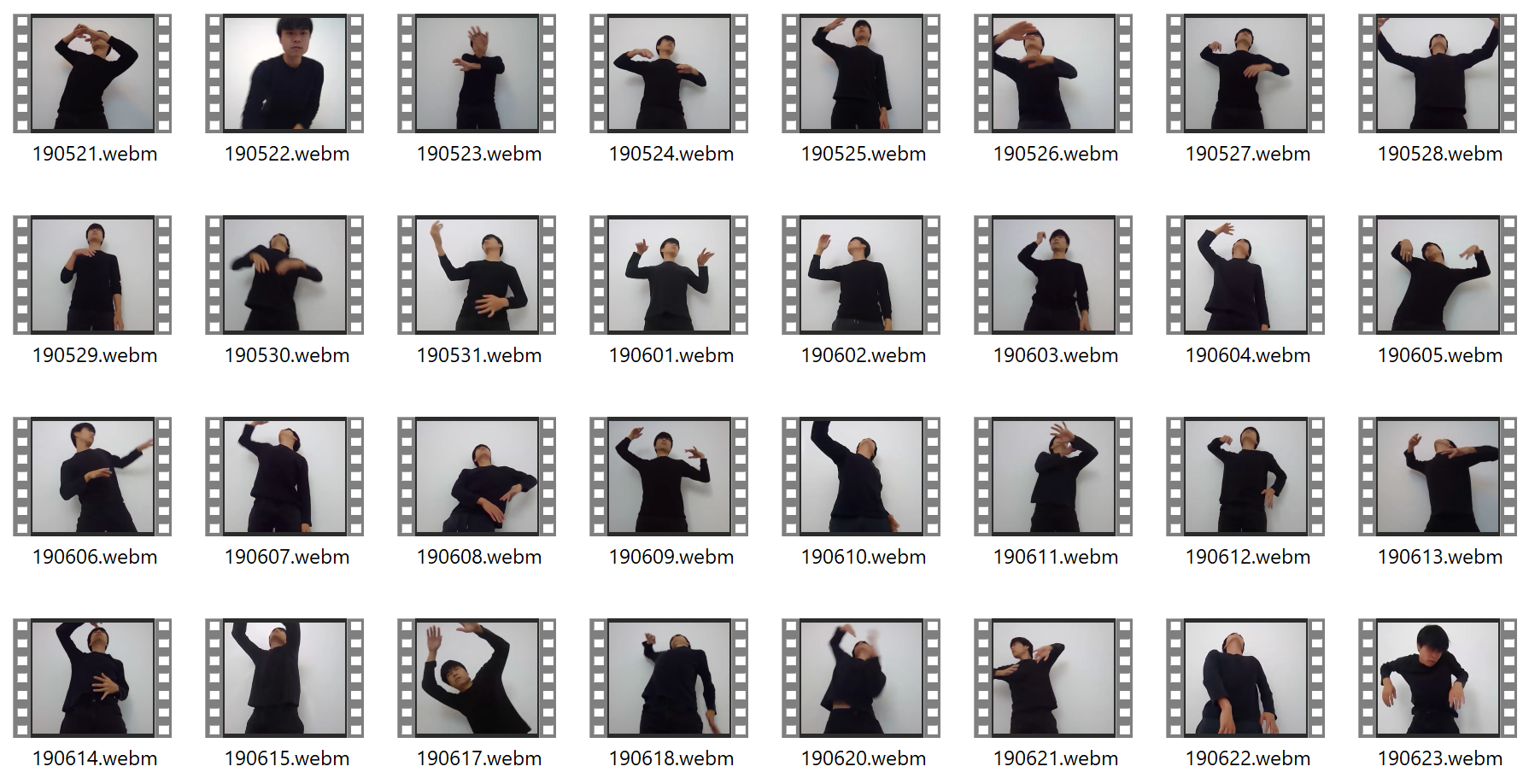

In the image and video, a webcam feed is input to a Processing program that I can live-code using a real-time JavaScript interpreter. The concept is to do a structured improvisation, interpreting a word through movements and code. However, I found that conveying the idea through movements and code is challenging. At that time, I focused on developing the software and not on practicing movements. From May to August 2019, I kept a daily practice of recording a 30-second dance clip in which I moved, inspired by a set of words that I came up with during a short meditation. This practice helped me to relate words (which are often a combination of a tangible object and an emotion) to movements, as well as to build a vocabulary of movements.

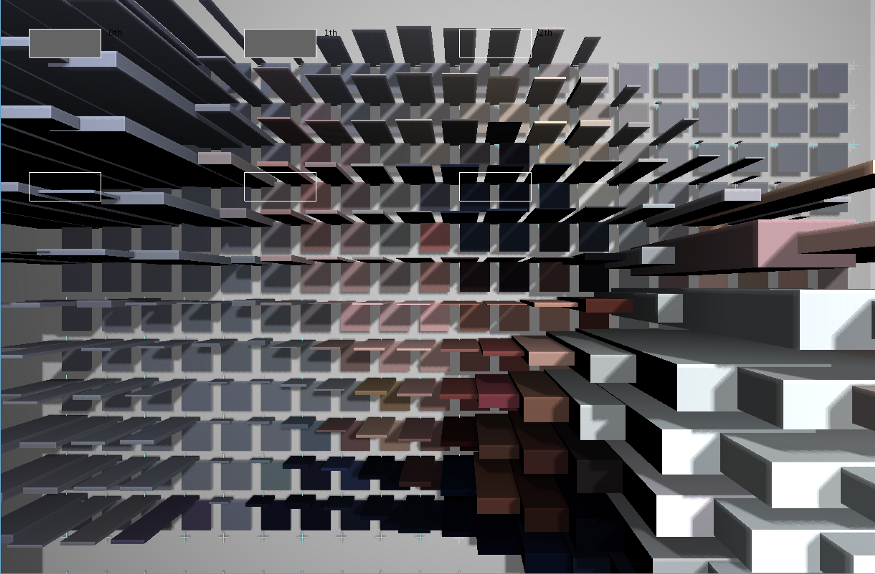

Meanwhile, I became interested in gallery spaces. The art market and visual artists still seem to be obsessed with the white-cube space. I visited Jonathan Monk’s exhibition at Kindl in Berlin, which exhibits artworks along with photographs of past exhibitions, creating an illusion of exhibitions inside an exhibition (and these photos also show photos of the previous exhibitions). At the same time, I was impressed by ofxWFC3D, a generative approach to architecture, and I created a demo to show p5.js sketches inside a generative, virtual gallery space.

virtual exhibition space - #processing sketches running in a processing sketch @shiffman @thecodingtrain @ProcessingOrg pic.twitter.com/27xz1I0daq

— Naoto HIÉDA (@naoto_hieda) March 18, 2019

Eventually, these two prototypes became the building blocks of a performance. My movements are exhibited in the virtual gallery space, which is the concept of the performance “Algorithm | Degeneracy”. Nevertheless, I needed to add two more layers. One was to replace the live-coding system that I had already created. Its output was only visual, and it required me to sit down to code, which is not suitable for a live performance. For the performance, I forked p-code, a simple live-coding language for sound synthesis. Sound complements the visual aspect of the movements, and what is more, p-code accepts any text as code. Although there is a syntax, there are no syntax errors in p-code. This is ideal because, as a performer, I can use code both for live coding and for communicating with the audience. In the first showing in a physical space, I showed the p-code editor and the virtual gallery space side by side. For the live stream at NL_CL, I embedded the p-code engine within the virtual gallery so that the code appears in the space as the audience walks through it.
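
To illustrate what “a syntax without syntax errors” can mean, here is a minimal sketch in Python. It is not p-code’s actual grammar, which is not described in this article; the toy rule below (digits become pitches, every other character becomes a rest) and the names `interpret` and `BASE_MIDI_NOTE` are invented purely for illustration.

```python
from typing import List, Optional

# Hypothetical choice for this toy example: the digit 0 maps to middle C.
BASE_MIDI_NOTE = 60


def interpret(text: str) -> List[Optional[int]]:
    """Turn any string into a sequence of steps without ever raising.

    Digits 0-9 become MIDI note numbers; every other character,
    including letters, spaces and punctuation, becomes a rest (None).
    """
    steps: List[Optional[int]] = []
    for ch in text:
        if ch in "0123456789":
            steps.append(BASE_MIDI_NOTE + int(ch))
        else:
            steps.append(None)  # unknown symbols are silent, not errors
    return steps


if __name__ == "__main__":
    # A plain message to the audience is also a valid "program".
    print(interpret("hello 047"))
```

Because every character has some meaning, a sentence typed for the audience and a pattern typed for the synthesizer go through the same path, and neither can fail.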

The other layer that I added highlights the process of creating dance videos. Inspired by Jonathan Monk taking a meta-approach to an exhibition, I did not want to recreate a gallery space merely to exhibit works online. During the showing in a physical space, I simply Google-searched images and projected them on the wall behind me before starting the video recording. I had to create a small program from scratch for chroma keying, to trim the green screen and overlay my body on a virtual background. The composited video is then uploaded to the virtual gallery to be exhibited. This entire process is part of the performance, and an exhibition is ready to open at the end of the performance.
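
For readers unfamiliar with chroma keying, the idea can be sketched in a few lines of Python. This is not the program used in the performance; it is a minimal illustration that assumes a pixel counts as green screen when its green channel clearly dominates red and blue. The function names and the `dominance` and `min_green` thresholds are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (red, green, blue), each 0-255
Image = List[List[Pixel]]      # rows of pixels


def is_green_screen(pixel: Pixel, dominance: float = 1.3,
                    min_green: int = 90) -> bool:
    """Assumed rule: green is bright enough and clearly stronger
    than both red and blue."""
    r, g, b = pixel
    return g >= min_green and g > dominance * max(r, b)


def chroma_key(foreground: Image, background: Image) -> Image:
    """Replace green-screen pixels of the foreground with the
    corresponding background pixels; keep everything else."""
    if len(foreground) != len(background) or any(
        len(f_row) != len(b_row)
        for f_row, b_row in zip(foreground, background)
    ):
        raise ValueError("foreground and background must have the same size")
    return [
        [b_px if is_green_screen(f_px) else f_px
         for f_px, b_px in zip(f_row, b_row)]
        for f_row, b_row in zip(foreground, background)
    ]


if __name__ == "__main__":
    camera = [[(0, 255, 0), (200, 150, 120)]]   # green screen, then skin tone
    gallery = [[(10, 20, 30), (40, 50, 60)]]    # virtual background
    print(chroma_key(camera, gallery))
```

A hard threshold like this leaves rough edges around the body; practical keyers usually soften the boundary, which is omitted here for brevity.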

You can visit the gallery from this link (sound will play when you enter).

Thank you to everyone who watched the performance. I would like to thank Joana Chicau and Marije Baalman for inviting me and organizing the event, and Jonathan Reus for the technical setup. I also thank the seminar group at KHM for giving me valuable feedback.